Energy of LLMs

#data #LLM #commentary

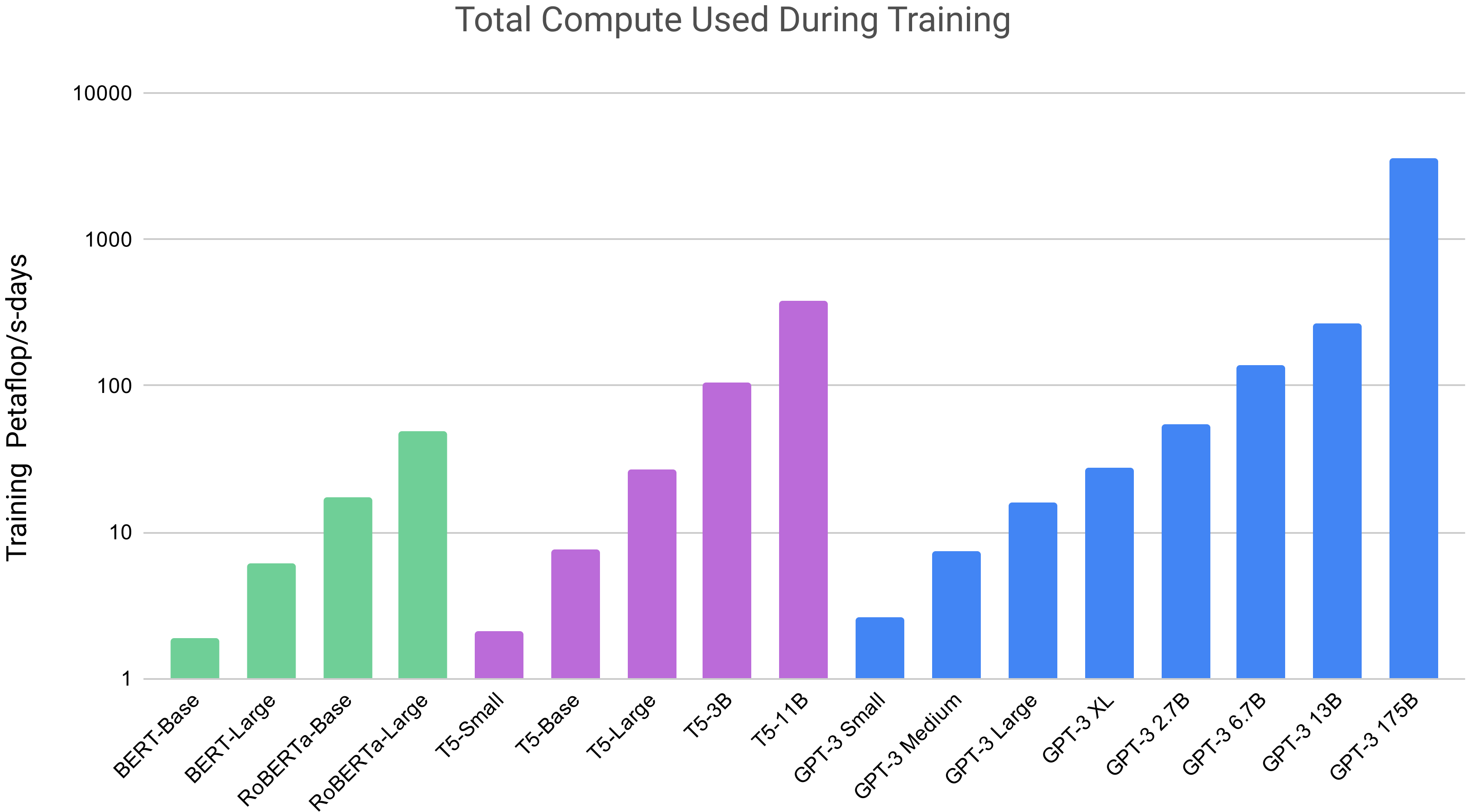

The rapid growth in model parameters and training data for LLMs has driven a sharp rise in compute and power requirements. Let's look at the compute and energy demands of LLMs using details from OpenAI's GPT-3 paper.

Training compute is often expressed in petaflop/s-days. One petaflop/s is one quadrillion (\(10^{15}\)) floating-point operations per second, so one petaflop/s-day is the work done by sustaining that rate for a full day: about \(8.64 \times 10^{19}\) operations.
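As a quick sanity check on the unit, here is a minimal Python sketch that converts petaflop/s-days into a raw operation count. The function and constant names are illustrative, not taken from the GPT-3 paper.

```python
# Convert petaflop/s-days into a total count of floating-point operations.
PETA = 10**15                    # one quadrillion
SECONDS_PER_DAY = 24 * 60 * 60   # 86,400 seconds


def pfs_days_to_flops(pfs_days: float) -> float:
    """Total floating-point operations in `pfs_days` petaflop/s-days."""
    return pfs_days * PETA * SECONDS_PER_DAY


# One petaflop/s sustained for one day.
print(f"{pfs_days_to_flops(1):.3e} operations")
```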

Parameter and Compute Relationship

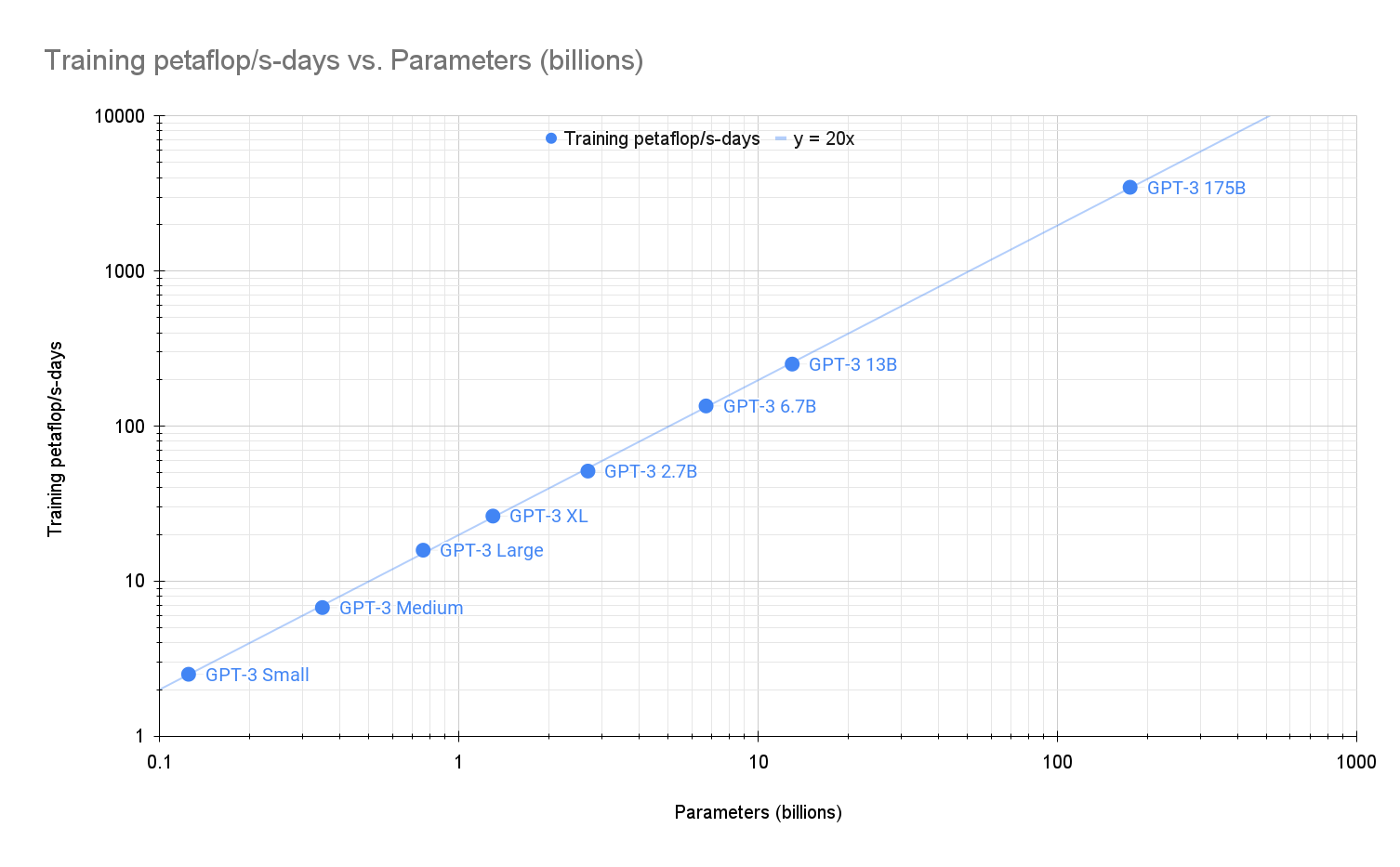

The GPT-3 paper reports the parameter counts and training compute for eight model sizes. The parameter counts are:

- GPT-3 Small - 0.125B

- GPT-3 Medium - 0.350B

- GPT-3 Large - 0.760B

- GPT-3 XL - 1.3B

- GPT-3 2.7B - 2.7B

- GPT-3 6.7B - 6.7B

- GPT-3 13.0B - 13.0B

- GPT-3 175.0B ("GPT-3") - 175.0B

The training compute can be read off the graph above. For these GPT-3 models, each additional 1 billion parameters requires approximately 20 petaflop/s-days of compute. The full GPT-3 model, with 175 billion parameters, required roughly 3470 petaflop/s-days of compute.
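The rule of thumb above can be sketched in a few lines of Python. This is only a rough linear estimate: the constant of 20 petaflop/s-days per billion parameters is the approximate value quoted in this post, and the helper name `estimate_training_compute` is made up for illustration.

```python
# Approximate slope quoted in the text (an estimate, not an exact figure).
PFS_DAYS_PER_BILLION_PARAMS = 20.0

# Model sizes in billions of parameters, as listed above.
GPT3_MODELS = {
    "GPT-3 Small": 0.125,
    "GPT-3 Medium": 0.350,
    "GPT-3 Large": 0.760,
    "GPT-3 XL": 1.3,
    "GPT-3 2.7B": 2.7,
    "GPT-3 6.7B": 6.7,
    "GPT-3 13.0B": 13.0,
    "GPT-3 175.0B": 175.0,
}


def estimate_training_compute(params_billion: float) -> float:
    """Rough training compute in petaflop/s-days for a given model size."""
    return params_billion * PFS_DAYS_PER_BILLION_PARAMS


for name, size in GPT3_MODELS.items():
    compute = estimate_training_compute(size)
    print(f"{name:<14} {size:>8.3f}B  ~{compute:>7.1f} petaflop/s-days")
```

For the 175B model the rule gives 3500 petaflop/s-days, close to the 3470 figure used in this post.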

Compute and Energy Resources

Frontier at Oak Ridge National Laboratory, one of the world's fastest supercomputers, cost $600 million to build and has a peak performance of 1.6 exaflop/s (1600 petaflop/s). Even running at that peak the whole time, training GPT-3 on Frontier would take over 2 days of continuous computation: \( \frac{3470 \text{ petaflop/s-days}}{1600 \text{ petaflop/s}} \approx 2.2 \text{ days}\).

Frontier consumes 21 MW of power at peak usage, which works out to about 1090 MWh of energy over the roughly 2.2 days of training. Since the average U.S. household uses about 10,800 kWh (10.8 MWh) per year, training GPT-3 would consume roughly as much energy as 100 homes use in a year.
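The time and energy arithmetic can be reproduced with a short Python sketch. It uses only the figures quoted in this post and assumes, as an idealization, that the machine runs at peak performance and peak power for the entire run. The function names are illustrative.

```python
# Figures quoted in the text.
GPT3_COMPUTE_PFS_DAYS = 3470.0      # training compute for GPT-3 175B
FRONTIER_PEAK_PFS = 1600.0          # peak performance in petaflop/s
FRONTIER_POWER_MW = 21.0            # power draw at peak usage
HOUSEHOLD_KWH_PER_YEAR = 10_800.0   # average U.S. household


def training_days(compute_pfs_days: float, machine_pfs: float) -> float:
    """Days of continuous computation, assuming sustained peak performance."""
    return compute_pfs_days / machine_pfs


def training_energy_mwh(days: float, power_mw: float) -> float:
    """Energy in MWh, assuming constant power draw for the whole run."""
    return power_mw * days * 24.0


def household_years(energy_mwh: float, kwh_per_year: float) -> float:
    """Number of households whose annual usage equals `energy_mwh`."""
    return energy_mwh * 1000.0 / kwh_per_year


days = training_days(GPT3_COMPUTE_PFS_DAYS, FRONTIER_PEAK_PFS)
energy_mwh = training_energy_mwh(days, FRONTIER_POWER_MW)
homes = household_years(energy_mwh, HOUSEHOLD_KWH_PER_YEAR)

print(f"{days:.2f} days, {energy_mwh:.0f} MWh, ~{homes:.0f} household-years")
```

The unrounded results (about 2.17 days, 1093 MWh, and 101 households) agree with the rounded figures above.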

Conclusion

Although OpenAI has not disclosed training details for models after GPT-3, unofficial estimates suggest GPT-4 has about 1.76 trillion parameters. Assuming the same ratio of roughly 20 petaflop/s-days per billion parameters, GPT-4 would require around 35,200 petaflop/s-days of compute, 22 days on Frontier, and as much energy as over 1,000 households use in a year. The scale of current and future LLMs creates compute and power challenges that earlier, smaller models did not. Companies are now focused on expanding computational capacity, exploring sustainable power options, and improving training efficiency to meet these demands.
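For reference, the GPT-4 figures follow from the same arithmetic used for GPT-3. This is a back-of-the-envelope sketch, assuming the unofficial 1.76 trillion parameter estimate, the same 20 petaflop/s-days per billion parameters, and Frontier running at peak performance and peak power throughout:

\[
\begin{aligned}
\text{compute} &\approx 1760 \text{ B parameters} \times 20 \ \tfrac{\text{petaflop/s-days}}{\text{B parameters}} = 35{,}200 \text{ petaflop/s-days} \\
\text{time} &\approx \frac{35{,}200 \text{ petaflop/s-days}}{1600 \text{ petaflop/s}} = 22 \text{ days} \\
\text{energy} &\approx 21 \text{ MW} \times 22 \text{ days} \times 24 \ \tfrac{\text{h}}{\text{day}} = 11{,}088 \text{ MWh} \\
\text{households} &\approx \frac{11{,}088 \text{ MWh}}{10.8 \text{ MWh per household-year}} \approx 1027
\end{aligned}
\]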